Long story short: I made this thing.

Or, to be technical, I made a thing that makes things like that thing. (If your device doesn’t render it, it looks like this.)

Backstory: a month and a half ago I left my job to make an indie game. One of the many things on my to-do list was “Learn shader programming”, and around that time I ran across this blog post by Roger Alsing about using genetic algorithms to create images. So I tried the same idea in 3D to experiment with GLSL.

Basically I run a pseudo-genetic algorithm on triangles in 3D space while comparing their 2D projection to a target image. The result is a chaotic bunch of polygons that happen to look like the target, but only when viewed from just the right angle.

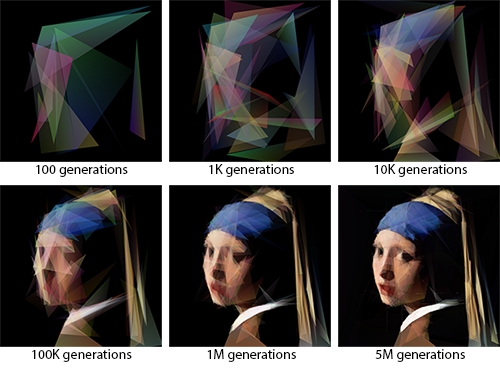
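Why does it only work from one angle? Under perspective projection, every point along a ray from the camera lands on the same pixel. Many different 3D arrangements therefore produce the same 2D image, and nothing in the score rewards them for looking sensible from anywhere else. Here's a minimal sketch, assuming a simple pinhole camera at the origin looking down +z with focal length `f`. The project's real camera setup isn't described here, and `project` is a hypothetical helper:

```python
# Pinhole-camera projection sketch (assumed camera at the origin, looking down +z).
# Not the project's actual camera code.
def project(point, f=1.0):
    """Project a 3D point (x, y, z) with z > 0 onto the 2D image plane."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    return (f * x / z, f * y / z)

# Two vertices at different depths, but on the same ray from the camera
near = (1.0, 2.0, 4.0)
far = (2.0, 4.0, 8.0)
print(project(near), project(far))  # → (0.25, 0.5) (0.25, 0.5)
```

The solver can slide vertices along these rays freely, and that freedom is why the result turns into chaos as soon as the viewpoint moves.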

Sample results:

Comparisons run on the GPU, so it’s reasonably fast: 1M generations take about 10 minutes for me, though this varies by target image and polygon count.
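For a sense of scale, that timing works out to well under a millisecond for each full render, compare, and readback cycle:

```latex
\frac{10\,\text{min} \times 60\,\text{s/min}}{10^{6}\ \text{generations}}
  = 6 \times 10^{-4}\,\text{s} = 0.6\,\text{ms per generation}
  \quad\Longleftrightarrow\quad
  \frac{1}{0.6\,\text{ms}} \approx 1{,}667\ \text{generations/s}
```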

Make your own:

Open the example client and uncheck “Paused”. To change the target image, just drag a local image file onto the page. (Note: this might not work on older video cards.)

About the algorithm:

Conceptually, the algorithm is extremely simple:

1. Generate random data to serve as vertex positions and colors.
2. Render the data as triangles.
3. Compare the result to a reference image and generate a score for how closely they match.
4. Randomly perturb the data, generate a score for the result, and keep the new data if the score has gone up.
5. Repeat step 4 until the output is interesting enough to write a blog post about.
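The loop above is essentially stochastic hill climbing. Here's a toy Python sketch of just the control flow, under loud assumptions: the "data" is a flat list of numbers in [0, 1], "rendering" is the identity, and `score` is a hypothetical negative squared error standing in for the GPU image comparison. None of these names come from the project's code.

```python
import random

def score(candidate, target):
    """Higher is better: negative sum of squared differences.
    Hypothetical stand-in for the GPU image comparison."""
    return -sum((c - t) ** 2 for c, t in zip(candidate, target))

def perturb(candidate, rng, step=0.1):
    """Return a copy with one randomly chosen value nudged, clamped to [0, 1]."""
    mutated = list(candidate)
    i = rng.randrange(len(mutated))
    mutated[i] = min(1.0, max(0.0, mutated[i] + rng.uniform(-step, step)))
    return mutated

def hill_climb(target, generations=20_000, seed=0):
    rng = random.Random(seed)
    # Random initial data (stand-in for vertex positions and colors)
    best = [rng.random() for _ in target]
    # "Render" (identity here) and score the starting point
    best_score = score(best, target)
    for _ in range(generations):
        # Perturb, rescore, and keep the candidate only if the score went up
        candidate = perturb(best, rng)
        candidate_score = score(candidate, target)
        if candidate_score > best_score:
            best, best_score = candidate, candidate_score
    return best, best_score

target = [0.2, 0.8, 0.5, 0.1]
best, best_score = hill_climb(target)
print(best_score)
```

Because a candidate is only kept when its score strictly improves, the best score never decreases. The trade-off is that a pure hill climber can get stuck in local optima.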

That’s it, nothing fancy. What’s (possibly) interesting is that all the heavy work is done on the GPU, including the step of comparing the candidate image to the reference. I wanted to keep things fast and I wanted to try out GPGPU, so I wrote shaders to do the comparison on hardware.

Specifically, there are two comparison shaders. The first takes two input framebuffers and outputs a score for how similar they are, and the second takes the output of the first and averages the values. Both shaders also reduce the total number of pixels by a factor of 16, by looping over a 4x4 square of input pixels for each output pixel. So I run the first shader once, then the second repeatedly until the data is down to a trivial size, then read the results back into JavaScript.
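To make the data flow concrete, here is a CPU-side Python emulation of the two passes. It is not GLSL. The per-pixel metric (1 minus the mean absolute difference of grayscale values in [0, 1]) is an assumption, since the post doesn't say what the first shader computes. The sketch also assumes square images whose side is a power of 4 (e.g. 16x16 or 64x64) so every pass divides evenly. `first_pass`, `average_pass`, and `similarity` are hypothetical names.

```python
def first_pass(a, b):
    """Compare two equal-sized grayscale images (lists of rows, values in [0, 1]).
    Each output cell scores one 4x4 input block: 1 - mean absolute difference."""
    h, w = len(a), len(a[0])
    out = []
    for by in range(0, h, 4):
        row = []
        for bx in range(0, w, 4):
            diffs = [abs(a[y][x] - b[y][x])
                     for y in range(by, by + 4) for x in range(bx, bx + 4)]
            row.append(1.0 - sum(diffs) / 16)
        out.append(row)
    return out

def average_pass(grid):
    """Average each 4x4 block of scores into one value (16x fewer cells)."""
    h, w = len(grid), len(grid[0])
    return [[sum(grid[y][x] for y in range(by, by + 4)
                 for x in range(bx, bx + 4)) / 16
             for bx in range(0, w, 4)]
            for by in range(0, h, 4)]

def similarity(a, b):
    """Run the first pass once, then average until one value remains."""
    grid = first_pass(a, b)
    while len(grid) > 1 or len(grid[0]) > 1:
        grid = average_pass(grid)
    return grid[0][0]  # the single value that would be read back

a = [[0.0] * 16 for _ in range(16)]
b = [[1.0 if x < 8 else 0.0 for x in range(16)] for _ in range(16)]
print(similarity(a, b))  # → 0.5
```

A 64x64 image goes 64 → 16 → 4 → 1 per side, so only one pixel ever crosses the GPU-to-CPU boundary.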

One wrinkle with all this is that WebGL apparently does not support reading floats back from the GPU, only integers in the range 0-255 (one unsigned byte per channel). Some people have cleverly worked around this by encoding floats on the GPU, but to keep things simple (too late!) I treated the RGB channels as the digits of a three-digit number in base 256. That gives 256³ ≈ 16.7 million distinct levels, which seemed to be plenty of resolution to detect even small changes in image similarity.
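Concretely, the packing treats R, G, and B as the most-, middle-, and least-significant digits. Here's a minimal Python sketch of the arithmetic, assuming the score has already been scaled into [0, 1). The real project does the encoding in a shader using float math, so its exact formula may differ, and `encode`/`decode` are hypothetical names:

```python
SCALE = 256 ** 3  # three base-256 "digits" -> 16,777,216 distinct levels

def encode(score):
    """Pack a score in [0, 1) into three bytes, most significant first."""
    if not 0.0 <= score < 1.0:
        raise ValueError("score must be in [0, 1)")
    n = int(score * SCALE)  # truncate to 24-bit fixed point
    return (n >> 16) & 0xFF, (n >> 8) & 0xFF, n & 0xFF

def decode(r, g, b):
    """Rebuild the score from the three bytes read back from the GPU."""
    return (r * 65536 + g * 256 + b) / SCALE

print(encode(0.5))           # → (128, 0, 0)
print(decode(*encode(0.5)))  # → 0.5
```

On the JavaScript side, decoding would apply the same formula to the bytes returned by `gl.readPixels`. Truncation loses less than 1/16,777,216 of the score.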

Performance and tweaks

When I started this I expected that finagling with the implementation might greatly affect the performance. However, profiling showed that the time spent waiting to read data off the GPU (even a single pixel) dominates everything else by a factor of 5-10. So I feel reasonably confident that the only way to significantly speed up generations would be to run several at once, reading back several results at a time. With that said, improving the “genetic” part of the algorithm could probably make it converge to better results in fewer generations. (I haven’t tried this - I’m learning GLSL here dammit, not GP!)
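The batching argument can be put into a rough cost model. Let $t_w$ be the GPU work per candidate (render plus comparison passes), let $t_r$ be the fixed stall of one readback, and let $r = t_r / t_w$ (roughly 5-10 according to the profiling). Evaluating $k$ candidates per readback gives the per-candidate time $T(k)$ and speedup $S(k)$ below. This assumes the stall doesn't grow with the number of pixels read, which is consistent with even a single pixel being slow:

```latex
T(k) = t_w + \frac{t_r}{k}, \qquad
S(k) = \frac{T(1)}{T(k)} = \frac{1 + r}{1 + r/k}
  \;\xrightarrow{\,k \to \infty\,}\; 1 + r

\text{e.g. } r = 8,\ k = 8:\quad S(8) = \frac{9}{2} = 4.5
```

Under this model, batching tops out at roughly a 6-11x speedup in candidates evaluated per second. Those candidates would also all be mutations of the same parent rather than a sequential chain.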

Source etc.

GLSL-Projectron on GitHub. Issues and pull requests are welcome. The core library that does all the solving is a requirable module, and the docs folder has a sample client app that provides the UI.

Updates

- 2021: Fixed links and removed “Lena” as a test image. Better late than never - that’s my motto.